2025 was the year of the Large Language Model (LLM). But as we settle into 2026, the industry’s focus has shifted decisively. We are no longer satisfied with AI that just reads; we demand AI that sees.

Vision-Language Models (VLMs) are the new frontier. These are the models that power agents capable of navigating software interfaces, analyzing medical X-rays, or guiding a robot through a messy warehouse. But for most engineering teams and researchers, there is a massive, unspoken barrier standing between them and this multimodal future: Infrastructure.

If you have ever tried to fine-tune a massive VLM using Reinforcement Learning (RL), you know the pain. Distributed training loops break, GPUs run out of memory (the dreaded CUDA OOM error) the moment you introduce image tokens, and GPU cluster costs skyrocket.

This is where MinT (Mind Lab Toolkit) comes in. MinT is not just another library; it is infrastructure designed specifically for “Experiential Intelligence”: helping agents learn from real-world feedback.

In this guide, we will explore how MinT solves the engineering nightmare of VLM training, specifically focusing on how you can train state-of-the-art models like Qwen3-VL without needing a budget the size of a small country.

The Problem: Why Training VLMs is So Hard

To understand the solution, we first have to respect the problem. Training a standard text-based LLM is already difficult. You have to manage tokenization, sequence lengths, and attention masks.

Now, imagine adding high-resolution images to that mix.

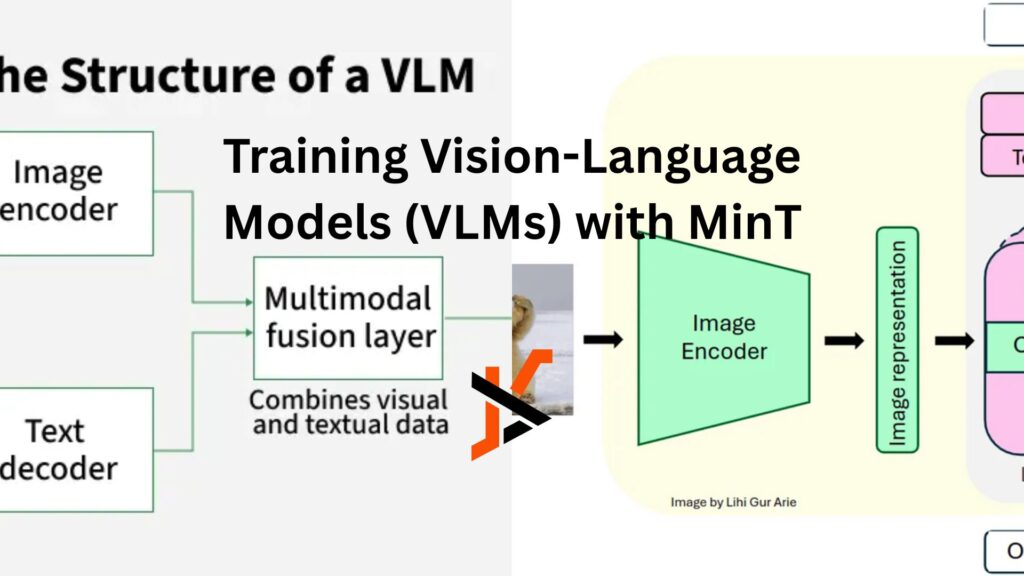

When you train a Vision-Language Model, you aren’t just processing text; you are also running a visual encoder that maps pixels into the same embedding space the language model uses for text tokens. This creates two specific headaches for engineers:

- Infrastructure Complexity: Most existing RLHF (Reinforcement Learning from Human Feedback) pipelines were hard-coded for text. Adapting them to handle image inputs often requires rewriting data loaders and hacking together distributed rollout logic that is fragile and prone to crashing.

- The “Compute Wall”: Visual data is heavy. Fine-tuning a multi-billion-parameter model with visual inputs consumes massive amounts of VRAM. For many startups and labs, the hardware requirements alone make VLM research a non-starter.
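To see why visual data is so heavy, it helps to count tokens. The sketch below estimates how many tokens a single image adds to the language model's context, assuming a ViT that cuts the image into 14-pixel patches and then merges each 2x2 block of patches into one token (the scheme described for Qwen2-VL). The function name `vision_tokens` is hypothetical, and the round-up-to-a-multiple resizing is a simplification; real processors also clamp images to minimum and maximum pixel budgets.

```python
import math


def vision_tokens(height: int, width: int, patch: int = 14, merge: int = 2) -> int:
    """Estimate LLM-side visual tokens for one image (simplified, hypothetical).

    Assumes patch x patch pixel tiles and a merger that fuses each
    merge x merge block of patches into a single token. Both sides are
    rounded up to a multiple of patch * merge so the grid divides evenly.
    """
    unit = patch * merge
    h = math.ceil(height / unit) * unit
    w = math.ceil(width / unit) * unit
    patches = (h // patch) * (w // patch)
    return patches // (merge * merge)


print(vision_tokens(224, 224))    # 64: a small thumbnail
print(vision_tokens(1344, 1344))  # 2304: a high-resolution image
print(vision_tokens(1080, 1920))  # 2691: a 1080p screenshot
```

Under these assumptions, one 1080p screenshot costs roughly as many tokens as several pages of text, and an agent episode that takes a screenshot at every step multiplies that again. Longer sequences mean more activation memory during the backward pass, which is where the VRAM goes.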

This is the exact bottleneck MinT was built to break.

Enter MinT: The Infrastructure for “Seeing” Agents

MinT (Mind Lab Toolkit) acts as an abstraction layer. In software engineering, we love abstractions because they hide messy details. MinT does this for Reinforcement Learning. It abstracts away compute scheduling, distributed rollout, and training orchestration so you can focus on the algorithm, not the plumbing.

What makes MinT particularly special for 2026 is its native support for Vision-Language Models.

According to the official documentation, MinT supports a wide lineup of models, including Kimi, DeepSeek, Qwen, and, crucially, Qwen3-VL. This means the framework isn’t just “multimodal-compatible”; it treats vision models as first-class citizens.

With MinT, you don’t need to write separate pipelines for text and vision. You gain a unified and reproducible way to run reinforcement learning across multiple models and tasks. Whether your agent is learning to write poetry or learning to interpret a security camera feed, the workflow remains consistent.

The Secret Sauce: LoRA RL Efficiency

Perhaps the most compelling reason to use MinT is cost.

Mind Lab, the research team behind MinT, recently made headlines with a stunning statistic: they achieved high-performance reinforcement learning on a 1-trillion-parameter model using only 10% of a typical GPU budget.

How is this possible? The answer lies in LoRA (Low-Rank Adaptation).

LoRA is a technique that freezes the pre-trained model weights and trains only small low-rank “adapter” matrices added alongside selected layers. While LoRA is common in supervised fine-tuning, applying it to Reinforcement Learning (LoRA RL) has historically been unstable and difficult to tune.
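The core LoRA idea fits in a few lines. The sketch below is the standard textbook formulation, not MinT's internal implementation (which the source does not show): a frozen weight matrix `W` is augmented with a trainable low-rank product `B @ A`, scaled by `alpha / rank`. The class name `LoRALinear` and its defaults are illustrative choices.

```python
import numpy as np


class LoRALinear:
    """A frozen linear layer W plus a trainable low-rank update B @ A (illustrative)."""

    def __init__(self, weight: np.ndarray, rank: int = 8, alpha: float = 16.0, seed: int = 0):
        d_out, d_in = weight.shape
        rng = np.random.default_rng(seed)
        self.weight = weight                                # frozen pre-trained weights
        self.A = rng.normal(0.0, 0.01, size=(rank, d_in))   # trainable, small random init
        self.B = np.zeros((d_out, rank))                    # trainable, zero init
        self.scale = alpha / rank

    def __call__(self, x: np.ndarray) -> np.ndarray:
        # y = W x + (alpha / r) * B A x, with x of shape (batch, d_in)
        return x @ self.weight.T + self.scale * (x @ self.A.T) @ self.B.T

    def trainable_params(self) -> int:
        # only A and B receive gradients; W stays frozen
        return self.A.size + self.B.size


rng = np.random.default_rng(42)
W = rng.normal(size=(1024, 1024))
layer = LoRALinear(W, rank=8)
x = rng.normal(size=(2, 1024))
print(np.allclose(layer(x), x @ W.T))   # True: B starts at zero, so output matches the base layer
print(W.size, layer.trainable_params()) # 1048576 16384
```

Initializing `B` to zero means training starts exactly from the pre-trained model's behavior, and only about 1.6% of this layer's parameters are trainable at rank 8.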

MinT places specific emphasis on making LoRA RL simple, stable, and efficient. The toolkit handles the mathematical complexities of gradient accumulation and stability adjustments under the hood.

For a VLM project, this is a game-changer. Depending on the model size, it can let you fine-tune a large model like Qwen3-VL on consumer-grade or mid-tier enterprise GPUs rather than renting an entire H100 cluster. You get the intelligence of a frontier model with the training cost of a much smaller one.
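A back-of-the-envelope memory estimate shows where the savings come from. This sketch uses the common rule of thumb that full fine-tuning with mixed-precision Adam needs about 16 bytes per parameter (bf16 weights and gradients, plus fp32 master weights and two fp32 Adam moments), while frozen bf16 weights need only 2. The model shape (8B parameters, 36 layers, hidden size 4096, 4 adapted square matrices per layer, rank 16) is hypothetical, and activations and rollout memory are ignored.

```python
BYTES_FULL_ADAM = 16  # bf16 weights + bf16 grads + fp32 master copy + two fp32 Adam moments
BYTES_FROZEN = 2      # bf16 weights only: no gradients, no optimizer state


def full_finetune_gb(n_params: float) -> float:
    # every parameter carries gradients and optimizer state
    return n_params * BYTES_FULL_ADAM / 1e9


def lora_params(n_layers: int, hidden: int, targets_per_layer: int, rank: int) -> int:
    # each adapted hidden x hidden matrix gets A (rank x hidden) and B (hidden x rank)
    return n_layers * targets_per_layer * 2 * hidden * rank


def lora_finetune_gb(n_params: float, adapter_params: int) -> float:
    # frozen base weights plus fully trained (but tiny) adapters
    return (n_params * BYTES_FROZEN + adapter_params * BYTES_FULL_ADAM) / 1e9


adapter = lora_params(n_layers=36, hidden=4096, targets_per_layer=4, rank=16)
print(adapter)                                   # 18874368
print(round(full_finetune_gb(8e9)))              # 128
print(round(lora_finetune_gb(8e9, adapter), 1))  # 16.3
```

Under these assumptions the weight-and-optimizer footprint drops from roughly 128 GB to roughly 16 GB, which is the difference between a multi-GPU node and a single large card. Image-heavy sequences still add activation memory on top.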

A Conceptual “How-To”: The MinT Workflow

So, what does it actually look like to use MinT? The philosophy of the toolkit is that you should only have to define four things:

- What to train: Select your base model (e.g., Qwen3-VL).

- What data to learn from: Your multimodal dataset (images + prompts).

- How to optimize: Your reward function (RLHF or direct feedback).

- How to evaluate: Your benchmarks.
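The four ingredients above can be captured in a small configuration object. The sketch below is hypothetical: `RLExperiment`, `exact_match_reward`, the image path, and the benchmark name are illustrative stand-ins, not MinT's actual configuration schema, and "Qwen3-VL" is a placeholder rather than an exact model identifier.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class RLExperiment:
    """Hypothetical container for the four things the workflow asks you to define."""
    base_model: str                                       # what to train
    dataset: list[dict]                                   # what data to learn from
    reward_fn: Callable[[dict, str], float]               # how to optimize
    benchmarks: list[str] = field(default_factory=list)   # how to evaluate


def exact_match_reward(example: dict, completion: str) -> float:
    # toy reward: 1.0 if the model's answer matches the label, ignoring case and whitespace
    return 1.0 if completion.strip().lower() == example["answer"].lower() else 0.0


exp = RLExperiment(
    base_model="Qwen3-VL",  # placeholder; check the docs for exact model identifiers
    dataset=[{"image": "receipts/0001.png", "prompt": "What is the total?", "answer": "$42.10"}],
    reward_fn=exact_match_reward,
    benchmarks=["receipt-qa-dev"],  # hypothetical benchmark name
)
print(exp.reward_fn(exp.dataset[0], " $42.10 "))  # 1.0
```

Keeping the reward function a plain callable makes it easy to unit-test in isolation, which is where most RL debugging time goes.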

MinT handles the rest.

In your code, you interact with high-level API functions. For example, instead of manually managing distributed gradients across GPUs, you use:

- forward_backward: This function computes and accumulates gradients automatically, handling the distributed complexity for you.

- optim_step: This updates the model parameters efficiently.

- sample: This generates outputs from your trained model to test performance in real-time.
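To show how these three calls fit together in an RL loop, here is a self-contained toy. `ToyPolicyClient` is a hypothetical stand-in that borrows the three function names from the list above; its signatures are invented for illustration and are not MinT's real client API. The "model" is a softmax over four discrete answers, trained with REINFORCE and a mean-reward baseline.

```python
import numpy as np


class ToyPolicyClient:
    """Hypothetical stand-in mimicking sample / forward_backward / optim_step on a tiny policy."""

    def __init__(self, n_actions: int, lr: float = 0.5, seed: int = 0):
        self.logits = np.zeros(n_actions)
        self.grad = np.zeros(n_actions)
        self.lr = lr
        self.rng = np.random.default_rng(seed)

    def probs(self) -> np.ndarray:
        z = np.exp(self.logits - self.logits.max())
        return z / z.sum()

    def sample(self, n: int) -> np.ndarray:
        # draw n actions ("rollouts") from the current policy
        return self.rng.choice(len(self.logits), size=n, p=self.probs())

    def forward_backward(self, actions: np.ndarray, advantages: np.ndarray) -> None:
        # accumulate the gradient of the loss -mean(advantage * log pi(action))
        p = self.probs()
        for a, adv in zip(actions, advantages):
            grad_logp = -p.copy()
            grad_logp[a] += 1.0  # d log softmax(a) / d logits
            self.grad -= adv * grad_logp / len(actions)

    def optim_step(self) -> None:
        # plain SGD on the accumulated gradient, then reset the accumulator
        self.logits -= self.lr * self.grad
        self.grad[:] = 0.0


def reward(action: int) -> float:
    return 1.0 if action == 2 else 0.0  # hypothetical task: answer 2 is correct


client = ToyPolicyClient(n_actions=4)
for step in range(200):
    actions = client.sample(16)
    rewards = np.array([reward(a) for a in actions])
    advantages = rewards - rewards.mean()  # mean baseline reduces variance
    client.forward_backward(actions, advantages)
    client.optim_step()

print(client.probs().round(2))
```

The loop itself stays the same whether the policy is a four-way softmax or a billion-parameter VLM; the infrastructure's job is to make `sample`, `forward_backward`, and `optim_step` work at scale so that your code only touches the reward and advantage logic.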

This “DevOps for RL” approach allows researchers to iterate faster. You aren’t debugging CUDA errors; you are debugging your reward function.

Real-World Impact: Why Experiential Intelligence Matters

Why go through the trouble of RL training for VLMs? Why not just prompt the model?

The answer lies in Mind Lab’s core philosophy: “Real intelligence learns from real experience”.

Pre-trained models are like students who have read every textbook in the world but have never stepped outside. They can describe an apple, but they might struggle to pick one up. By using MinT to run Reinforcement Learning loops, you are allowing your VLM to “experience” tasks.

Imagine an AI agent designed to browse the web and book flights. A pre-trained VLM might get confused by a pop-up ad or a dynamic layout. But if you train it with RL using MinT, the model learns from the experience of success and failure. It learns that clicking the “X” removes the ad and leads to the reward (booking the flight).
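A reward function for this flight-booking agent might look like the sketch below. Everything here is hypothetical: the `Step` record, the action strings, and the shaping constants are illustrative. Note that closing the ad earns no reward directly; the agent learns to do it because trajectories that close the ad are the ones that end in a booking.

```python
from dataclasses import dataclass


@dataclass
class Step:
    action: str              # e.g. "click:close_ad", "type:destination" (hypothetical)
    page_error: bool = False


def booking_reward(steps: list[Step], booked: bool, step_penalty: float = 0.01) -> float:
    """Sparse task reward with small shaping terms (hypothetical design).

    +1.0 only if the flight was actually booked; each step costs a little so
    shorter successful episodes score higher, and page errors cost more.
    """
    reward = 1.0 if booked else 0.0
    reward -= step_penalty * len(steps)
    reward -= 0.1 * sum(s.page_error for s in steps)
    return reward


success = [Step("click:close_ad"), Step("type:destination"), Step("click:book")]
stuck = [Step("click:book", page_error=True) for _ in range(5)]
print(booking_reward(success, booked=True))
print(booking_reward(stuck, booked=False))
```

Keeping the success signal dominant and the shaping terms small is a deliberate choice: heavy shaping can teach the agent to optimize the penalties instead of the task.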

MinT enables Scaled Agentic RL, allowing models to capture and utilize experience across longer interaction horizons. This is the difference between a demo that looks cool and a product that actually works.
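Why are long horizons hard? One generic way to see it (standard RL, not a description of how MinT implements Scaled Agentic RL) is the discounted return, G_t = r_t + gamma * G_{t+1}. With a single reward at the end of an episode, the credit reaching the first action shrinks as the episode gets longer. The discount factor of 0.99 below is an illustrative assumption.

```python
def discounted_returns(rewards: list[float], gamma: float = 0.99) -> list[float]:
    # G_t = r_t + gamma * G_{t+1}, computed backwards from the final step
    returns = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


short_episode = discounted_returns([0.0] * 9 + [1.0])   # reward after 10 steps
long_episode = discounted_returns([0.0] * 99 + [1.0])   # reward after 100 steps
print(round(short_episode[0], 3), round(long_episode[0], 3))
```

The first action in the 100-step episode receives well under half the credit of the first action in the 10-step episode, so long-horizon agents need more rollouts, better baselines, or both to learn reliably.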

Conclusion: The Post-Training Era is Here

We are entering the “Post-Training Era.” The competitive advantage is no longer just about who has the biggest pre-trained model, but who can most effectively adapt that model to specific, complex tasks.

For Vision-Language Models, the barriers of cost and complexity have been daunting. But with tools like MinT, those barriers are crumbling. By abstracting away the infrastructure and optimizing for LoRA efficiency, MinT is democratizing access to frontier-level AI research.

Whether you are a startup founder looking to build a visual agent on a budget, or a researcher trying to publish the next big paper, the message is clear: You don’t need a thousand GPUs. You just need the right toolkit.