Organizations continuously strive to turn their data into insights and better decisions. The journey from raw data to actionable intelligence, however, depends on well-designed, carefully optimized data pipelines. These pipelines are the backbone of any data ecosystem, moving information reliably from source to destination. To improve efficiency and scalability, teams need practices that streamline processes, reduce bottlenecks, and ensure reliability. Here are some proven strategies for optimizing your data pipelines:

Assess Your Current Pipeline Architecture

Before diving into optimization techniques, it’s crucial to assess the current state of your data pipeline architecture. This includes identifying existing workflows, data sources, transformation processes, and destinations. Understanding how data moves through your system can reveal inefficiencies, redundancies, and bottlenecks. By mapping out the current architecture, organizations can pinpoint the areas that need improvement and give the optimization effort a clear starting point.
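One lightweight way to do this mapping is to record, for each stage, which datasets it reads and writes, and then derive the dependency graph from that inventory. The minimal Python sketch below does exactly that; every stage and dataset name is hypothetical. It uses the standard library's `graphlib.TopologicalSorter` (Python 3.9+) to compute a valid run order, and it flags datasets that are written but never read and are not declared destinations. Those are candidates for redundancy, not proof of it.

```python
from graphlib import TopologicalSorter  # Python 3.9+

# Hypothetical inventory of stages and the datasets each one reads and writes.
STAGES = {
    "ingest_orders":    {"reads": ["orders_api"], "writes": ["raw_orders"]},
    "ingest_customers": {"reads": ["crm_db"], "writes": ["raw_customers"]},
    "clean_orders":     {"reads": ["raw_orders"], "writes": ["orders_clean"]},
    "join_sales":       {"reads": ["orders_clean", "raw_customers"], "writes": ["sales"]},
    "legacy_export":    {"reads": ["raw_orders"], "writes": ["orders_csv"]},
}
# Datasets consumed outside the pipeline (dashboards, reports, downstream teams).
DESTINATIONS = {"sales"}


def build_graph(stages: dict) -> dict:
    """Map each stage to the set of upstream stages that produce its inputs."""
    producer = {ds: name for name, spec in stages.items() for ds in spec["writes"]}
    return {
        name: {producer[ds] for ds in spec["reads"] if ds in producer}
        for name, spec in stages.items()
    }


def unused_outputs(stages: dict, destinations: set) -> set:
    """Datasets that are written but never read and are not declared destinations."""
    written = {ds for spec in stages.values() for ds in spec["writes"]}
    read = {ds for spec in stages.values() for ds in spec["reads"]}
    return written - read - destinations


graph = build_graph(STAGES)
order = list(TopologicalSorter(graph).static_order())
print("run order:", order)
print("review for redundancy:", unused_outputs(STAGES, DESTINATIONS))  # {'orders_csv'}
```

Here `legacy_export` surfaces because nothing reads `orders_csv`; whether it is truly redundant is a question for the people who own it.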

Embrace Scalability from the Start

Scalability should be a fundamental design principle when creating a data pipeline. As data volumes grow and analytical demands increase, your pipeline must be able to handle the load without significant re-engineering. Choose technologies and architectures that are inherently scalable. For instance, cloud-based solutions often provide elastic scalability, allowing resources to expand or contract based on demand. This flexibility lets your pipeline absorb growing data volumes and new workloads without compromising performance.
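A common building block behind horizontal scalability is partitioning records by a key so that independent workers can process partitions in parallel, and adding workers simply means more partitions. The sketch below assumes hypothetical `customer_id` and `amount` fields. It uses a thread pool only to stay self-contained; CPU-bound Python work would more realistically use `ProcessPoolExecutor` or a distributed engine.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor


def partition_for(key: str, num_partitions: int) -> int:
    """Stable hash partitioning: a given key always lands in the same partition.

    hashlib is used instead of the built-in hash(), whose value for strings
    changes between interpreter runs unless PYTHONHASHSEED is fixed.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


def split(records: list, num_partitions: int, key_field: str = "customer_id") -> list:
    """Group records into partitions by hashing the key field."""
    partitions = [[] for _ in range(num_partitions)]
    for rec in records:
        partitions[partition_for(str(rec[key_field]), num_partitions)].append(rec)
    return partitions


def process_partition(records: list) -> int:
    # Placeholder for real per-partition work: here, a simple sum.
    return sum(r["amount"] for r in records)


def run(records: list, num_workers: int) -> int:
    """Partition the input and process the partitions concurrently."""
    partitions = split(records, num_workers)
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        return sum(pool.map(process_partition, partitions))


records = [{"customer_id": i % 7, "amount": i} for i in range(100)]
# Scaling out changes how the work is divided, not the answer.
print(run(records, 1), run(records, 4))  # 4950 4950
```

Because all records for one key land in the same partition, per-key aggregations stay correct no matter how many workers are used.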

Implement Modular Design

A modular design approach is vital for creating efficient and maintainable data pipelines. Breaking the pipeline down into smaller, reusable components makes it easier to update, test, and troubleshoot. Each module can be optimized for performance individually, so teams can focus on specific areas without disrupting the entire system. Taken further, a microservices architecture lets organizations deploy changes to one component incrementally while the rest of the pipeline keeps running.
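To make the idea concrete, here is a minimal sketch of modular stages in plain Python: each stage is a small function that takes a stream of records and returns a new stream, and `pipeline` chains them. The stage names and record fields are hypothetical, and floats are used for money only for brevity (real code would likely use `Decimal`).

```python
from typing import Callable, Iterable, Iterator

Record = dict
# A stage takes a stream of records and returns a new stream.
Stage = Callable[[Iterable[Record]], Iterator[Record]]


def drop_nulls(field: str) -> Stage:
    """Discard records where `field` is missing or None."""
    def stage(records: Iterable[Record]) -> Iterator[Record]:
        return (r for r in records if r.get(field) is not None)
    return stage


def to_cents(field: str) -> Stage:
    """Convert a decimal currency amount to integer cents."""
    def stage(records: Iterable[Record]) -> Iterator[Record]:
        for r in records:
            yield {**r, field: round(r[field] * 100)}
    return stage


def rename(old: str, new: str) -> Stage:
    """Rename a field without mutating the input record."""
    def stage(records: Iterable[Record]) -> Iterator[Record]:
        for r in records:
            out = dict(r)
            out[new] = out.pop(old)
            yield out
    return stage


def pipeline(*stages: Stage) -> Stage:
    """Chain stages left to right into a single stage."""
    def run(records: Iterable[Record]) -> Iterator[Record]:
        for stage in stages:
            records = stage(records)
        return iter(records)
    return run


clean_orders = pipeline(drop_nulls("amount"), to_cents("amount"), rename("amount", "amount_cents"))
raw = [{"id": 1, "amount": 19.99}, {"id": 2, "amount": None}, {"id": 3, "amount": 5.0}]
print(list(clean_orders(raw)))  # [{'id': 1, 'amount_cents': 1999}, {'id': 3, 'amount_cents': 500}]
```

Each stage can be unit-tested on its own, and swapping or reordering stages does not touch the others.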

Optimize Data Storage Solutions

Data storage choices significantly affect the efficiency of data pipelines. Choosing the right storage technology is crucial for optimizing read/write operations and data retrieval times. For example, NoSQL databases can be advantageous for semi-structured or unstructured data with flexible schemas, while columnar storage formats such as Parquet or ORC are often ideal for analytical workloads, because queries read only the columns they need. Additionally, data warehousing solutions that support parallel processing can improve query performance and shorten the time users wait for results.
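Why columnar layouts help analytics can be shown with a toy, in-memory comparison. The sketch below is only an illustration of the access pattern, not how Parquet or ORC are implemented; the order fields are hypothetical. It counts how many stored values each layout has to touch to sum a single column.

```python
def to_columnar(rows: list) -> dict:
    """Pivot row dicts into one list per column (assumes every row has the same keys)."""
    if not rows:
        return {}
    return {name: [row[name] for row in rows] for name in rows[0]}


def sum_amount_row_store(rows: list) -> tuple:
    # A row layout stores whole records together, so the scan touches every field.
    values_scanned = sum(len(row) for row in rows)
    return sum(row["amount"] for row in rows), values_scanned


def sum_amount_column_store(columns: dict) -> tuple:
    # A columnar layout stores each field contiguously, so only "amount" is read.
    amounts = columns["amount"]
    return sum(amounts), len(amounts)


rows = [
    {"order_id": 1, "region": "EU", "amount": 120, "notes": "gift wrap"},
    {"order_id": 2, "region": "US", "amount": 75, "notes": ""},
    {"order_id": 3, "region": "EU", "amount": 300, "notes": "expedite"},
]
print(sum_amount_row_store(rows))                  # (495, 12)
print(sum_amount_column_store(to_columnar(rows)))  # (495, 3)
```

With wide tables and many rows, that difference in data read is what makes columnar formats attractive for aggregations.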

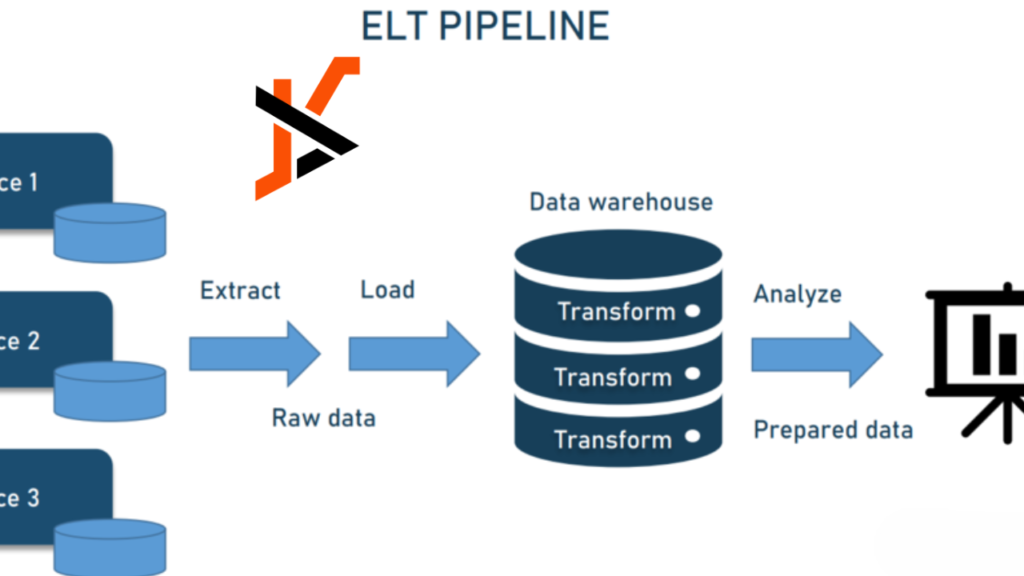

Streamline Data Transformation Processes

Data transformation is a critical stage in the data pipeline and often accounts for a large share of its processing time. To optimize this stage, organizations should consider transformation frameworks that support batch processing, stream processing, or a combination of both. Tools like Apache Spark or Apache Flink can significantly accelerate transformations by distributing the work across a cluster. Moreover, applying filters and other data-reducing transformations as close to the data source as possible shrinks the volume of data moved downstream, which minimizes latency and improves efficiency.
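The benefit of filtering early can be seen even without a distributed engine. The sketch below is plain Python, not Spark's or Flink's API: a simulated source yields events lazily, and a hypothetical `Enricher` stands in for an expensive transformation and counts how often it runs. Applying the cheap filter first means the expensive step only sees the records that matter, which is the same idea engines apply with predicate pushdown.

```python
from typing import Iterable, Iterator


def read_events(n: int) -> Iterator[dict]:
    """Simulated source that yields events lazily instead of loading them all at once."""
    for i in range(n):
        yield {"event_id": i, "country": "DE" if i % 4 == 0 else "US", "value": i}


class Enricher:
    """Stand-in for an expensive per-record transformation; counts its calls."""

    def __init__(self) -> None:
        self.calls = 0

    def __call__(self, event: dict) -> dict:
        self.calls += 1
        return {**event, "value_squared": event["value"] ** 2}


def transform_filter_late(events: Iterable[dict], country: str, enrich: Enricher) -> list:
    # Enrich everything, then discard most of it.
    enriched = [enrich(e) for e in events]
    return [e for e in enriched if e["country"] == country]


def transform_filter_early(events: Iterable[dict], country: str, enrich: Enricher) -> list:
    # Apply the cheap filter first, while records stream in, so the
    # expensive step only runs on the rows that survive it.
    relevant = (e for e in events if e["country"] == country)
    return [enrich(e) for e in relevant]


late, early = Enricher(), Enricher()
result_late = transform_filter_late(read_events(100), "DE", late)
result_early = transform_filter_early(read_events(100), "DE", early)
print(late.calls, early.calls)  # 100 25
```

Both functions return the same records; only the amount of work differs.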

Monitor and Measure Performance

Continuous monitoring is essential for maintaining an optimized data pipeline. By collecting metrics on throughput, latency, and error rates, organizations gain valuable insight into pipeline performance. Monitoring tools and dashboards can help identify bottlenecks in real time, enabling proactive troubleshooting and optimization. Regular performance reviews help assess the effectiveness of recent changes and identify new opportunities for improvement.
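As a minimal sketch of the three metrics named above, the hypothetical `StageMetrics` class below wraps each unit of work, times it, and counts failures, then reports throughput, error rate, and 95th-percentile latency. It keeps everything in memory; a real deployment would export these numbers to whatever monitoring system the team already uses.

```python
import time
from dataclasses import dataclass, field
from statistics import quantiles


@dataclass
class StageMetrics:
    """In-memory counters for one pipeline stage."""
    processed: int = 0
    failed: int = 0
    latencies_s: list = field(default_factory=list)
    started: float = field(default_factory=time.perf_counter)

    def record(self, fn, *args):
        """Call fn(*args), timing it and counting failures instead of raising."""
        t0 = time.perf_counter()
        try:
            return fn(*args)
        except Exception:
            self.failed += 1  # a real pipeline might route the record to a dead-letter queue
            return None
        finally:
            self.processed += 1
            self.latencies_s.append(time.perf_counter() - t0)

    def summary(self) -> dict:
        elapsed = time.perf_counter() - self.started
        lat = self.latencies_s
        # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
        p95 = quantiles(lat, n=20, method="inclusive")[-1] if len(lat) >= 2 else (lat[0] if lat else 0.0)
        return {
            "throughput_per_s": self.processed / elapsed if elapsed > 0 else 0.0,
            "error_rate": self.failed / self.processed if self.processed else 0.0,
            "p95_latency_s": p95,
        }


metrics = StageMetrics()
for divisor in [1, 2, 0, 4]:
    metrics.record(lambda d: 10 // d, divisor)  # 10 // 0 raises and is counted as a failure
print(metrics.summary())
```

The `inclusive` quantile method keeps the percentile within the range of observed latencies, which suits small samples.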

Automate Where Possible

Automation can significantly enhance the efficiency of data pipelines by reducing manual intervention and minimizing human error. Employing orchestration tools, such as Apache Airflow or AWS Step Functions, allows for the automation of data workflows, scheduling, and monitoring. Automation can also streamline data cleansing and validation processes, ensuring that data is consistently accurate and reliable. By freeing up data engineers from repetitive tasks, organizations can allocate resources to more strategic initiatives.
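The core of what orchestrators provide, running tasks in dependency order, retrying transient failures, and skipping work whose inputs failed, fits in a short sketch. This is not the API of Apache Airflow or AWS Step Functions; `run_dag` and the example tasks are hypothetical and use only the Python standard library (3.9+ for `graphlib`).

```python
import time
from graphlib import TopologicalSorter  # Python 3.9+


def run_dag(tasks, deps, max_retries=2, backoff_s=0.0):
    """Run zero-argument callables in dependency order with retries.

    tasks: {name: callable}; deps: {name: set of upstream task names},
    where every upstream name is assumed to also appear in tasks.
    Returns {name: "success" | "failed" | "skipped"}.
    """
    graph = {name: set(deps.get(name, ())) for name in tasks}
    status = {}
    for name in TopologicalSorter(graph).static_order():
        if any(status.get(up) != "success" for up in graph[name]):
            status[name] = "skipped"  # an upstream task did not succeed
            continue
        for attempt in range(max_retries + 1):
            try:
                tasks[name]()
                status[name] = "success"
                break
            except Exception:
                if attempt == max_retries:
                    status[name] = "failed"
                else:
                    time.sleep(backoff_s * 2 ** attempt)  # exponential backoff
    return status


calls = {"extract": 0}


def extract():
    calls["extract"] += 1
    if calls["extract"] == 1:
        raise ConnectionError("transient failure")  # succeeds on the retry


def transform():
    pass


def load():
    raise RuntimeError("permanent failure")


def report():
    pass


result = run_dag(
    {"extract": extract, "transform": transform, "load": load, "report": report},
    {"transform": {"extract"}, "load": {"transform"}, "report": {"load"}},
)
print(result)  # extract and transform succeed, load fails, report is skipped
```

Production orchestrators add scheduling, persistence of task state, and alerting on top of this basic loop.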

Ensure Data Quality and Governance

Data quality is paramount to the success of any data pipeline. Implementing best practices around data governance can ensure that the data flowing through the pipeline is accurate, complete, and consistent. This includes establishing data validation rules, implementing automated data quality checks, and maintaining comprehensive documentation of data sources and transformations. A robust data governance framework not only enhances the reliability of insights derived from the data but also fosters trust among stakeholders.
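Automated validation rules can be as simple as named predicates applied to every record, with failing records quarantined alongside the reasons they failed rather than silently dropped. The rules and field names below are hypothetical examples for an orders feed; the sketch is plain Python rather than any particular data-quality library.

```python
from typing import Callable

# Hypothetical rules for an orders feed: (human-readable name, predicate).
RULES: list[tuple[str, Callable[[dict], bool]]] = [
    ("order_id is present", lambda r: r.get("order_id") is not None),
    ("amount is a non-negative number",
     lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0),
    ("currency is a 3-letter upper-case code",
     lambda r: isinstance(r.get("currency"), str)
     and len(r["currency"]) == 3 and r["currency"].isupper()),
]


def validate(records, rules):
    """Split records into (valid, rejected); rejected entries keep the failed rule names."""
    valid, rejected = [], []
    for rec in records:
        failures = [name for name, check in rules if not check(rec)]
        if failures:
            rejected.append({"record": rec, "failed_rules": failures})
        else:
            valid.append(rec)
    return valid, rejected


batch = [
    {"order_id": 1, "amount": 10, "currency": "EUR"},
    {"order_id": None, "amount": -5, "currency": "eur"},
    {"order_id": 3, "amount": 7.5, "currency": "USD"},
]
valid, rejected = validate(batch, RULES)
print(len(valid), rejected[0]["failed_rules"])
```

Keeping the rule names with each rejected record doubles as documentation of what the pipeline considers valid data.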

Leverage Advanced Technologies

The emergence of advanced technologies such as artificial intelligence (AI) and machine learning (ML) offers new opportunities for optimizing data pipelines. These technologies can be employed to predict and manage data flows, automate anomaly detection, and enhance data transformations. For instance, machine learning algorithms can analyze historical data patterns to optimize resource allocation dynamically, thereby improving pipeline performance. Integrating AI-driven solutions can lead to more intelligent and adaptive data pipelines that respond to changing demands in real time.
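Anomaly detection does not have to start with a trained model. The sketch below substitutes a deliberately simple statistical baseline for machine learning: it compares each run's row count with the mean and standard deviation of the preceding runs and flags values more than three standard deviations away. The counts, window size, and threshold are illustrative assumptions; a learned model could later replace `is_anomalous` without changing the surrounding code.

```python
from statistics import mean, stdev


def is_anomalous(history: list, observed: float, threshold: float = 3.0) -> bool:
    """Flag `observed` if it lies more than `threshold` standard deviations from the history mean."""
    if len(history) < 2:
        return False  # too little history to estimate spread
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return observed != mu  # perfectly flat history: any change is unusual
    return abs(observed - mu) / sigma > threshold


def flag_runs(counts: list, window: int = 7, threshold: float = 3.0) -> list:
    """Score each run against the `window` runs before it."""
    return [
        is_anomalous(counts[max(0, i - window):i], c, threshold)
        for i, c in enumerate(counts)
    ]


daily_rows = [1000, 1020, 980, 1010, 990, 1005, 400]  # illustrative row counts
print(flag_runs(daily_rows))  # only the final run is flagged
```

A sudden drop like the last value often signals an upstream outage or a partial load, which is exactly the kind of issue worth alerting on before downstream reports are built.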

Foster a Culture of Collaboration

Lastly, fostering a culture of collaboration between data engineers, data scientists, and business stakeholders is essential for optimizing data pipelines. Cross-functional teams can offer diverse perspectives, enabling the identification of pain points and opportunities for enhancement. Regular collaboration sessions can facilitate knowledge sharing, encourage innovative ideas, and ensure alignment on data objectives. By working together, teams can create a cohesive strategy that drives the continuous improvement of data pipelines.

In summary, optimizing data pipelines requires a multifaceted approach that encompasses architectural assessments, modular design, and the adoption of advanced technologies. By embracing best practices such as scalability, automation, and continuous monitoring, organizations can enhance the efficiency and scalability of their data pipelines, ultimately unlocking the full potential of their data assets. As the landscape of data continues to evolve, staying ahead of the curve through optimization will provide a competitive edge in the pursuit of insights and innovation.