Have you explored the enigmatic realm of signals and frequencies? If so, you have probably encountered the terms jitter and phase noise. These concepts may appear intricate at first, but there’s no need to worry! In this blog post, we’ll break everything down and clarify the distinction between jitter and phase noise in simple terms.

Understanding these phenomena is essential for any tech enthusiast. So let’s jump right in and decode jitter vs phase noise once and for all! Prepare yourself for an illuminating expedition as we uncover the mysteries behind signal quality.

Understanding Jitter

Jitter is the variation in signal timing: it occurs when a signal’s transitions arrive earlier or later than they would in an ideal periodic waveform. Think of a favorite song’s rhythm suddenly drifting offbeat. Jitter is introduced by electronic noise from sources such as power supply fluctuations and electromagnetic interference, as well as by clocking errors within devices and transmission impairments in communication systems.

The impact of jitter on signals is akin to struggling through a conversation over a poor phone connection, with garbled words and comprehension difficulties. In digital systems, even small amounts of jitter can raise the bit error rate and cause dropped packets, degrading system performance and reliability. Stay tuned for insights into phase noise, another critical aspect of signal quality!

Definition of Jitter

Jitter, a common term in electronics and telecommunications, refers to the variation or fluctuation in signal timing. It represents minor deviations between expected and actual timing, noticeable as disruptions like out-of-sync audio in an online video.
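To make "deviation between expected and actual timing" concrete, here is a minimal sketch that computes three commonly used jitter figures from a list of clock edge timestamps. The metric names (period jitter, cycle-to-cycle jitter, time interval error) are standard terms that this post does not otherwise cover, and the edge times are made-up illustration values, not measurements.

```python
import statistics

def jitter_metrics(edge_times, nominal_period):
    """Return (period jitter RMS, peak cycle-to-cycle jitter, peak-to-peak TIE)."""
    # Measured periods between consecutive edges.
    periods = [b - a for a, b in zip(edge_times, edge_times[1:])]
    # Period jitter: standard deviation of the measured periods.
    period_jitter_rms = statistics.pstdev(periods)
    # Cycle-to-cycle jitter: largest change between adjacent periods.
    c2c_peak = max(abs(b - a) for a, b in zip(periods, periods[1:]))
    # Time interval error (TIE): each edge compared with an ideal clock
    # that starts at the first measured edge.
    tie = [t - (edge_times[0] + n * nominal_period)
           for n, t in enumerate(edge_times)]
    tie_pk_pk = max(tie) - min(tie)
    return period_jitter_rms, c2c_peak, tie_pk_pk

# Hypothetical 100 MHz clock (10 ns nominal period); edge times in nanoseconds.
edges_ns = [0.0, 10.02, 19.99, 30.01, 40.00, 49.97]
pj_rms, c2c, tie_pp = jitter_metrics(edges_ns, nominal_period=10.0)
print(f"period jitter (RMS):      {pj_rms:.3f} ns")
print(f"cycle-to-cycle (peak):    {c2c:.3f} ns")
print(f"TIE (peak-to-peak):       {tie_pp:.3f} ns")
```

Which figure matters depends on the application: a data link usually cares about TIE, while a digital logic path cares more about period jitter.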

Several factors contribute to jitter, including electromagnetic interference (EMI) from nearby devices and clocking errors within communication systems. The effects of jitter on signals can be significant. In digital communications, it can lead to data errors and packet loss, impacting transmission quality. Analog systems, such as audio applications, may experience audible artifacts like clicks or pops. Overall, jitter degrades performance by reducing reliability and introducing uncertainty into signal timing.

Causes of Jitter

Jitter, defined as the variation in the timing of a signal, can stem from various causes. Clock instability, resulting from factors like component aging or temperature changes, is a common contributor. Electromagnetic interference (EMI) induced by external electrical signals can disrupt signal transmission and introduce timing inconsistencies.

As data rates increase, each bit occupies a shorter time window, so a given amount of timing error consumes a larger share of the bit period and timing errors become more likely. Impedance mismatches on circuit boards or cables can cause reflections and distortions, adding to jitter.

Power supply noise, which causes voltage levels to fluctuate, can also increase jitter. Recognizing these causes lets engineers apply practical countermeasures during system design and implementation, including filtering, shielding, and stable power supplies. Well-manufactured circuit boards also help keep jitter low, and sourcing components from a reputable store like Kunkune can help with the quality and reliability of your circuit boards.
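A rough way to see how voltage noise (from a power supply or EMI) turns into timing error: noise shifts the moment an edge crosses the logic threshold, and the shift is approximately the noise voltage divided by the edge's slew rate. The sketch below applies that first-order estimate. The function name and the example numbers are hypothetical, and real circuits have additional coupling paths this ignores.

```python
def noise_to_jitter(noise_volts_rms, slew_rate_v_per_s):
    """First-order estimate: timing error = voltage noise / edge slew rate."""
    if slew_rate_v_per_s <= 0:
        raise ValueError("slew rate must be positive")
    return noise_volts_rms / slew_rate_v_per_s

# Hypothetical case: 5 mV RMS of noise on an edge slewing at 1 V/ns.
jitter_s = noise_to_jitter(noise_volts_rms=5e-3, slew_rate_v_per_s=1e9)
print(f"estimated jitter: {jitter_s * 1e12:.1f} ps RMS")

# The same noise on a slower 0.1 V/ns edge gives ten times the jitter,
# which is one reason fast, clean edges are preferred for clocks.
slow_edge_jitter_s = noise_to_jitter(5e-3, 1e8)
```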

Effects of Jitter on Signals

Jitter, characterized by variations in signal timing, poses significant challenges to signal quality and overall performance. Its impact includes an increased bit error rate, leading to difficulties in accurate data bit interpretation and subsequent degradation of signal integrity. In applications demanding precise timing, such as high-speed data communication or audio/video streaming, even minor jitter can disrupt synchronization, causing issues like dropped frames or data packet loss.
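The link between jitter and bit errors can be illustrated with a deliberately simplified model. Assume the jitter is zero-mean Gaussian and the receiver samples at the center of each bit, so an error becomes likely once an edge moves by more than half a unit interval (UI). This is only a sketch under those assumptions, not a full bit error rate model, and the link speed and jitter values are hypothetical.

```python
import math

def edge_violation_probability(unit_interval, jitter_rms):
    """P(|timing error| > UI/2) for zero-mean Gaussian jitter."""
    margin = unit_interval / 2.0
    # For a Gaussian with standard deviation s, P(|x| > a) = erfc(a / (s * sqrt(2))).
    return math.erfc(margin / (jitter_rms * math.sqrt(2.0)))

ui = 100e-12  # hypothetical 10 Gb/s link: 100 ps per bit
for sigma in (5e-12, 7e-12, 10e-12):
    p = edge_violation_probability(ui, sigma)
    print(f"RMS jitter {sigma * 1e12:4.1f} ps -> violation probability {p:.2e}")
```

The steep change in probability for a modest change in RMS jitter is why "even minor jitter" matters at high data rates.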

Furthermore, jitter influences system stability and reliability, introducing unpredictable timing variations that may lead to critical failures in applications like telecommunications or medical equipment. Analog signals are not immune either: when an analog signal is sampled or reconstructed with a jittery clock, for example, the timing errors appear as waveform distortion and added noise, degrading signal quality and accuracy.

Recognizing the consequences of jitter emphasizes the need for effective management and minimization strategies. Employing proper design practices and technologies that mitigate or compensate for jitter-induced distortions is essential for ensuring reliable signal transmission without compromising quality or performance.

Understanding Phase Noise

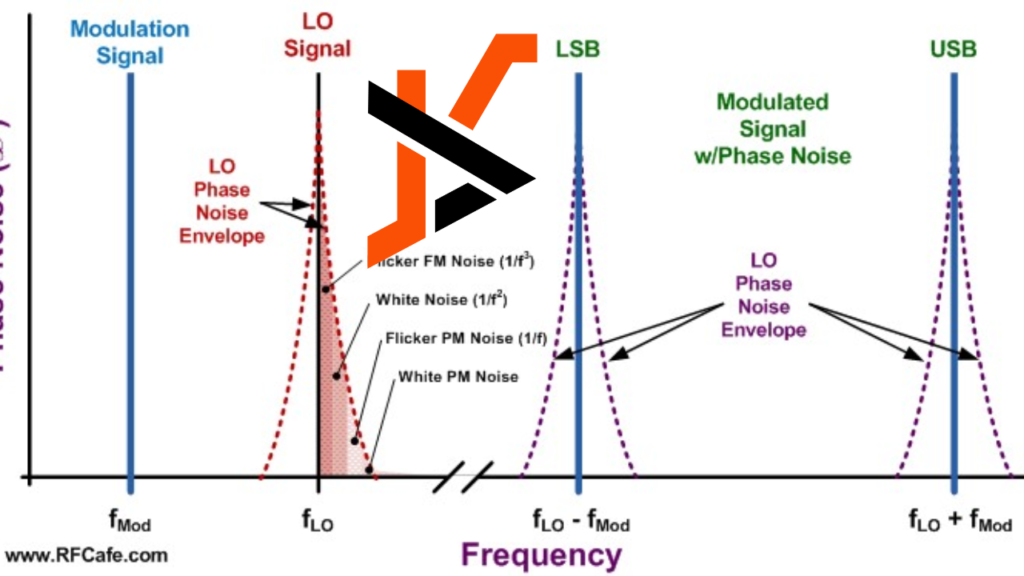

Phase noise is the random fluctuation of a signal’s phase over time, and it can significantly affect the stability and precision of electronic devices. It typically originates from noise and imperfections in components such as oscillators, and it can degrade communication systems, reducing overall performance and reliability.

The consequences of phase noise range from data errors to reduced signal quality and higher bit error rates, which makes it especially important in applications that require accurate timing, such as wireless communications and radar systems. To measure and analyze phase noise accurately, engineers use specialized equipment such as spectrum analyzers, which show how much noise is present at each frequency offset from the carrier.

In industries like telecommunications, aerospace, and defense, where reliable communication is critical, careful management of phase noise is essential. Engineers improve system designs by addressing both jitter and phase noise with mitigation techniques. By tackling these phenomena proactively, they can improve overall performance and reduce the risk of problems later.

Definition of Phase Noise

Phase noise refers to random, short-term fluctuations in the phase of a signal. It describes how the signal deviates from an ideal, perfectly periodic waveform over time because of noise and imperfections in electronic components. It is quantified in dBc/Hz: the noise power in a 1 Hz bandwidth at a stated frequency offset from the carrier, relative to the carrier power. Lower (more negative) values signify better signal quality and stability. Phase noise matters in applications requiring precise timing, including telecommunications, wireless communication, radar, and satellite systems.
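As a simplified illustration of the dBc/Hz unit, the sketch below converts a spectrum analyzer style reading into dBc/Hz by referencing the noise level to the carrier and normalizing to a 1 Hz bandwidth. The function name and readings are hypothetical. It also assumes the measured noise is dominated by phase noise and ignores the instrument-specific correction factors that a real measurement needs.

```python
import math

def phase_noise_dbc_hz(carrier_dbm, noise_dbm, rbw_hz):
    """Noise relative to the carrier, normalized to a 1 Hz bandwidth."""
    if rbw_hz <= 0:
        raise ValueError("resolution bandwidth must be positive")
    return noise_dbm - carrier_dbm - 10.0 * math.log10(rbw_hz)

# Hypothetical reading: 0 dBm carrier, -90 dBm of noise at a 10 kHz offset,
# measured with a 1 kHz resolution bandwidth (RBW).
level = phase_noise_dbc_hz(carrier_dbm=0.0, noise_dbm=-90.0, rbw_hz=1e3)
print(f"{level:.1f} dBc/Hz at 10 kHz offset")
```

Note that a phase noise figure is only meaningful together with its offset frequency, which is why the printout includes it.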

Engineers leverage phase noise understanding to design efficient and reliable systems, enhancing performance by minimizing timing errors. This insight ensures high-quality signals in critical applications without sacrificing accuracy or effectiveness.

Sources of Phase Noise

Phase noise, a common phenomenon affecting signal accuracy, originates from various sources. Voltage-controlled oscillators (VCOs) contribute through device noise and non-linearities, which cause phase fluctuations. Thermal noise, caused by the random thermal motion of charge carriers in electronic devices, adds random phase and frequency fluctuations. Frequency multipliers raise phase noise along with the frequency (ideally by 20·log10(N) dB for multiplication by N), while frequency dividers lower it by the same ideal amount but add noise and timing errors of their own.
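The effect of frequency multiplication and division on phase noise follows a well-known ideal rule: changing the frequency by a factor N changes the phase noise by 20·log10(N) dB. The sketch below applies only that ideal rule. The function name and example values are hypothetical, and the noise that real multipliers and dividers add on top is not modeled.

```python
import math

def scaled_phase_noise(dbc_hz, factor):
    """Ideal phase noise after frequency scaling.

    factor > 1 models multiplication, factor < 1 models division.
    """
    if factor <= 0:
        raise ValueError("factor must be positive")
    return dbc_hz + 20.0 * math.log10(factor)

# Hypothetical 10 MHz reference at -150 dBc/Hz, multiplied by 100 to 1 GHz.
multiplied = scaled_phase_noise(-150.0, 100)
# The same reference divided by 4 (factor 0.25).
divided = scaled_phase_noise(-150.0, 0.25)
print(f"x100: {multiplied:.1f} dBc/Hz, /4: {divided:.1f} dBc/Hz")
```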

Power supply noise, electromagnetic interference (EMI), mechanical vibrations, temperature variations, and environmental factors also induce phase fluctuations. Identifying and addressing these sources enables engineers to design low-phase-noise systems. Proper design and component selection mitigate the impact, ensuring optimal signal quality in challenging conditions.

Effects of Phase Noise on Signals

Phase noise significantly impacts signal quality by causing distortion and reducing spectral purity. Random phase fluctuations lead to coherence loss and errors during signal transmission or reception. As phase noise increases, more of the carrier’s energy spreads into noise sidebands around it, which can mask weak nearby signals and cause interference and signal degradation.

In communication systems, it contributes to higher bit error rates, decreased data throughput, lower modulation accuracy, and reduced receiver sensitivity. Additionally, phase noise affects the precision of oscillators, introducing uncertainty and instability. Managing phase noise is vital across industries, including telecommunications, aerospace, defense systems, wireless networks, audio equipment, and radar systems, to ensure reliable and high-quality signals.

Applications and Importance in Different Industries

- Telecommunications: Accurate synchronization is essential for cellular networks, satellite communications, and internet service providers. Even minimal jitter or phase noise can lead to dropped calls, slow internet speeds, and disrupted communication channels.

- Healthcare: Medical devices, including MRI machines, ultrasound equipment, and heart monitors, require precise timing for accurate diagnoses and treatment outcomes. Excessive jitter or phase noise could compromise patient safety and lead to incorrect readings.

- Aerospace: The aerospace industry relies on effective control of jitter and phase noise in aircraft navigation systems. Uncontrolled variations could jeopardize flight safety by providing inaccurate guidance to pilots.

- Audio Engineering: Musicians depend on low levels of jitter and phase noise for high-fidelity recordings during studio sessions or live performances. Disturbances can introduce unwanted artifacts into the sound signal, affecting audio quality.

- Financial Institutions: Highly sophisticated trading platforms in the financial sector rely on ultra-low latency connections to execute transactions rapidly. Fluctuations in signal stability caused by jitter and phase noise can impact transaction speed and reliability.

Understanding how jitter and phase noise affect different applications within these industries allows professionals to optimize operations, ensure reliable performance, and address unique challenges in their respective sectors.

Conclusion: Importance of Managing Jitter and Phase Noise

In today’s digital era, understanding jitter and phase noise is essential for maintaining signal quality and reliability. Jitter, the timing variation in a signal, can result from factors like electromagnetic interference and affects diverse industries. Phase noise, the random fluctuation of a signal’s phase, arises from thermal effects or electronic component non-linearities and affects signal quality in applications like wireless communications and radar systems.

Recognizing the interconnection between jitter and phase noise is essential, as underlying phase noise characteristics can induce variations in timing. Specialized equipment is necessary for accurate measurement, and various techniques exist for characterizing these phenomena at different frequency ranges.
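One common way to express this interconnection numerically is to integrate a phase noise curve over a range of offset frequencies and convert the resulting RMS phase error into RMS jitter. The sketch below does this under stated assumptions: the curve is single-sideband phase noise in dBc/Hz, it is a straight line between points on a log-frequency axis, and the carrier, offsets, and levels are hypothetical values rather than data from any real oscillator.

```python
import math

def rms_jitter_from_phase_noise(carrier_hz, offsets_hz, dbc_hz):
    """Integrate single-sideband phase noise (dBc/Hz) into RMS jitter in seconds."""
    total = 0.0  # integral of linear phase noise over offset frequency
    points = list(zip(offsets_hz, dbc_hz))
    for (f1, l1), (f2, l2) in zip(points, points[1:]):
        p1 = 10.0 ** (l1 / 10.0)  # dBc/Hz -> linear ratio per Hz
        # Exponent b of the power law L(f) ~ f**b on this segment.
        b = (l2 - l1) / (10.0 * math.log10(f2 / f1))
        if abs(b + 1.0) < 1e-9:
            total += p1 * f1 * math.log(f2 / f1)
        else:
            total += p1 * f1 / (b + 1.0) * ((f2 / f1) ** (b + 1.0) - 1.0)
    # Factor 2 accounts for both sidebands; the result is RMS phase in radians.
    phase_rms_rad = math.sqrt(2.0 * total)
    # One carrier period corresponds to 2*pi radians.
    return phase_rms_rad / (2.0 * math.pi * carrier_hz)

# Hypothetical 100 MHz oscillator, integrated from 1 kHz to 20 MHz offset.
offsets = [1e3, 1e4, 1e5, 2e7]
levels = [-110.0, -130.0, -150.0, -150.0]
jitter = rms_jitter_from_phase_noise(100e6, offsets, levels)
print(f"RMS jitter: {jitter * 1e15:.0f} fs")
```

The integration limits matter as much as the curve itself, so a jitter figure derived this way should always be quoted with its offset range.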

Effectively managing jitter and phase noise is essential for reliable operation in complex communication systems across multiple industries. Applying mitigation strategies at the design stage, such as using high-quality oscillators and minimizing environmental interference, reduces their detrimental impact on signal integrity.

Understanding the distinction between jitter and phase noise clarifies how each influences signal performance, and it emphasizes the need for proactive management through meticulous design and testing practices to ensure optimal functionality in our daily technologies.